Shortcomings of one-hot encoding and n-gram features in text classification

In this tutorial, I describe the basic limitations of one-hot encoding and n-gram features for measuring text similarity.

One-hot encoding

A few techniques are available for encoding text, and one-hot encoding is one of them. One-hot encoding maps each word to a one-hot vector. Consider two similar sentences, "she was a beautiful woman" and "she was a wonderful woman". If we construct a vocabulary (let's call it V) from these sentences, it contains V = {she, was, a, beautiful, wonderful, woman}. We can then create a one-hot vector for each word in V. The length of each vector equals the size of the vocabulary, |V| = 6. Each vector is all zeros except for a single one at the index of the word it represents, as the encoding below shows.


she = [1,0,0,0,0,0]

was = [0,1,0,0,0,0]

a = [0,0,1,0,0,0]

beautiful = [0,0,0,1,0,0]

wonderful = [0,0,0,0,1,0]

woman = [0,0,0,0,0,1]
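The encoding above can be reproduced with a few lines of plain Python. This is a minimal sketch: the helper name `one_hot` is hypothetical, and the vocabulary is written out in the same order as V in the text (a set or first-occurrence order could produce a different, equally valid, index assignment).

```python
sentences = ["she was a beautiful woman", "she was a wonderful woman"]

# Vocabulary V, in the same order as listed in the text
vocab = ["she", "was", "a", "beautiful", "wonderful", "woman"]

# Sanity check: V contains exactly the distinct words of both sentences
assert set(vocab) == {word for s in sentences for word in s.split()}

def one_hot(word, vocab):
    """Return a list of len(vocab) zeros with a single 1 at the word's index."""
    vector = [0] * len(vocab)
    vector[vocab.index(word)] = 1
    return vector

for word in vocab:
    print(word, "=", one_hot(word, vocab))
```

Every vector has length |V| = 6 and exactly one non-zero entry.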

In the one-hot representation, all words are independent of each other. To visualize this encoding, think of a 6-dimensional space where each word occupies one dimension and has no projection along any other dimension, so every pair of word vectors is orthogonal. As a result, "beautiful" and "wonderful" are treated as exactly as different from each other as from any other word, which is not true. As the vocabulary grows, the encoding also produces a huge sparse matrix (|V| × |V| entries, only |V| of them non-zero), which consumes more and more memory.
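To make the independence problem concrete, the sketch below compares one-hot vectors with two common measures. Cosine similarity and Euclidean distance are my choice of measures, not something the text prescribes, and the vocabulary sizes 10,000 and 50,000 in the memory loop are hypothetical values chosen only for illustration.

```python
import math

# Vocabulary V from the text, in the same order
vocab = ["she", "was", "a", "beautiful", "wonderful", "woman"]

def one_hot(word):
    """Return a len(vocab) vector with a 1 at the word's index and 0 elsewhere."""
    vector = [0] * len(vocab)
    vector[vocab.index(word)] = 1
    return vector

def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the vector norms."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def euclidean(u, v):
    """Straight-line distance between two vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

# Near-synonyms vs. an unrelated pair: both pairs get the same scores
for w1, w2 in [("beautiful", "wonderful"), ("beautiful", "she")]:
    u, v = one_hot(w1), one_hot(w2)
    print(w1, w2, "cosine:", cosine(u, v), "distance:", euclidean(u, v))

# Memory: a dense |V| x |V| one-hot matrix stores |V| ** 2 numbers, only |V| non-zero
for size in (len(vocab), 10_000, 50_000):  # 10_000 and 50_000 are hypothetical sizes
    print(size, "words:", size * size, "entries,", size, "non-zero")
```

Any two distinct one-hot vectors have cosine similarity 0 and distance √2, so the encoding carries no information about which words are related.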

N-grams

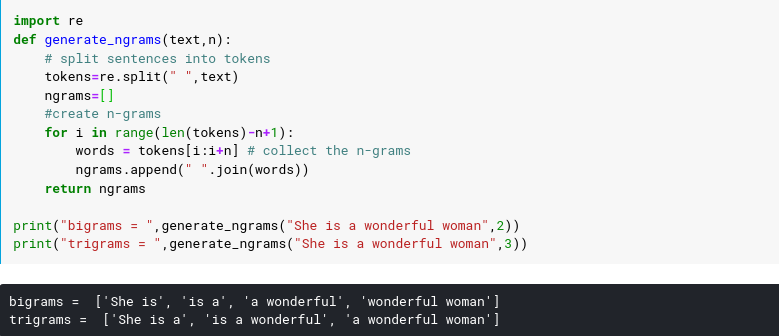

N-grams can be used to represent the relation between words in a document: counting n-grams measures how often words co-occur within a window of N consecutive words. For example, for the sentence "she is a wonderful woman" with N = 2 (known as bigrams), the n-grams would be:

- she is

- is a

- a wonderful

- wonderful woman

We have 4 n-grams in this case.
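The bigrams above come from sliding a window of N words across the sentence one position at a time. A minimal sketch, assuming simple whitespace tokenization; the helper name `ngrams` is hypothetical:

```python
def ngrams(tokens, n):
    """Return every contiguous window of n tokens, joined with spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokens = "she is a wonderful woman".split()
bigrams = ngrams(tokens, 2)
print(bigrams)
```

The same function with `n=3` yields the trigrams listed next.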

With N = 3 (known as trigrams), the n-grams would be:

- she is a

- is a wonderful

- a wonderful woman

So we have 3 n-grams in this case.
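N-grams still treat "a beautiful" and "a wonderful" as unrelated features, and a single word substitution changes every n-gram that contains the substituted word. The sketch below applies this to the two similar sentences from the one-hot section. Jaccard overlap (shared n-grams divided by all distinct n-grams) is my choice of similarity measure for illustration, not one the text prescribes.

```python
def ngrams(tokens, n):
    """Return every contiguous window of n tokens, joined with spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def jaccard(a, b):
    """Size of the intersection divided by size of the union of two collections."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b)

s1 = "she was a beautiful woman".split()
s2 = "she was a wonderful woman".split()

for n in (1, 2, 3):
    g1, g2 = ngrams(s1, n), ngrams(s2, n)
    print(n, "shared:", sorted(set(g1) & set(g2)), "jaccard:", round(jaccard(g1, g2), 2))
```

The two near-identical sentences share a smaller fraction of their features as N grows (4 of 6 unigrams, 2 of 6 bigrams, 1 of 5 trigrams).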

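In general, the number of n-grams in a given sentence K is X - (N - 1) = X - N + 1,

where X is the number of words in K. For "she is a wonderful woman", X = 5, which gives 4 bigrams and 3 trigrams.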
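The count formula can be checked against an actual sliding window for every N. A small sketch; the helper name `ngram_count` is hypothetical, and the `max(..., 0)` guard is my addition so the formula returns 0 instead of a negative number when N is larger than X + 1.

```python
def ngram_count(num_words, n):
    """Number of n-grams in a sentence of num_words words: X - (N - 1), floored at 0."""
    return max(num_words - (n - 1), 0)

tokens = "she is a wonderful woman".split()
X = len(tokens)  # X = 5 for this sentence

for n in range(1, X + 2):
    # Count the windows directly and compare with the formula
    windows = [tokens[i:i + n] for i in range(len(tokens) - n + 1)]
    assert len(windows) == ngram_count(X, n)
    print("N =", n, "->", ngram_count(X, n), "n-grams")
```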

The major drawback of n-gram features is increasing data sparsity: the larger N is, the less likely any particular n-gram is to appear, so more data is needed to successfully train a statistical model. N-grams also do not reduce the input feature space. With a vocabulary V there are up to |V|^N possible n-grams, so the feature space grows exponentially with N.
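The sparsity argument can be seen even on the two-sentence example: the sketch below compares the number of distinct n-grams that actually occur with the upper bound |V|^N. The 10,000-word vocabulary on the last lines is a hypothetical size used only to show how quickly the bound grows.

```python
sentences = ["she was a beautiful woman", "she was a wonderful woman"]
vocab = {word for s in sentences for word in s.split()}  # |V| = 6

def ngrams(tokens, n):
    """Return every contiguous window of n tokens, joined with spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

stats = {}
for n in (1, 2, 3):
    observed = {g for s in sentences for g in ngrams(s.split(), n)}
    possible = len(vocab) ** n  # upper bound on distinct n-grams: |V| ** N
    stats[n] = (len(observed), possible)
    print("N =", n, "observed:", len(observed), "possible:", possible)

# Hypothetical 10,000-word vocabulary: the trigram space alone has 10**12 dimensions
print("possible trigrams for |V| = 10,000:", 10_000 ** 3)
```

Only a tiny fraction of the possible n-gram dimensions is ever observed, so each document's feature vector is almost entirely zeros.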